Train a Mask R-CNN model on your own data

Get started with object detection and segmentation.

Posted on

Computers have always been good at number crunching, but analyzing the huge amount of data in images still brought them to their knees. Until recently that is, when libraries for graphics processing units were created to do more than just play games. We can now harness the raw power of thousands of cores to unlock the meanings behind the pictures.

Using your data

We’re going to be working with an example shape dataset, which has different sizes and colors of circles, squares, and triangles on randomly colored backgrounds. I’ve already went ahead and created a COCO-style version. If you want to learn how to convert your own dataset, take a look at the previous article.

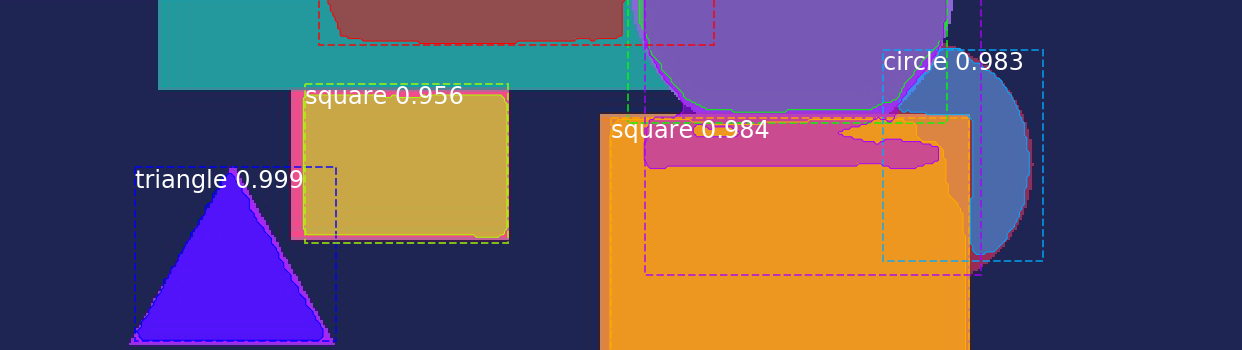

This time our focus will be to automatically label all the shapes in an image and find out where each of them are, down to the pixel. This type of task is called “object segmentation”. During your exploration of computer vision you may have also come across terms like “object recognition”, “class segmentation”, and “object detection”. These all sound similar and can be confusing at first, but seeing what they do helps clear it up. Below are examples of what kind of information we get from each of the four types. Tasks become more difficult as we move from left to right.

Object recognition tells us what is in the image, but not where or how much. Class segmentation adds position information to the different types of objects in the image. Object detection separates out each object with a rough bounding box. And finally, the hardest of the four, and the one we’ll be training for, object segmentation. It gives every shape a clear boundary, which can also be used to create the results from the previous three.

With a simple dataset like the one we’re using here, we could probably use old school computer vision ideas like Hough (pronounced Huff) circle and line detection or template matching to get pretty good results. But by using deep learning we don’t have to change our approach much to get the same type of results on nearly any type of image dataset. And all without having to think about which exact features we’re looking for. It’s almost magic.

What is MASK R-CNN?

Before we jump into training our own Mask R-CNN model, let’s quickly break down what the different parts of the name mean. We’ll start from right to left, since that’s the order they were invented.

“NN”s are neural networks. They’re an idea inspired by how we imagined biological neurons worked. A neural network is a collection of connected neurons and each neuron outputs a signal depending on its inputs and internal parameters. When we train a neural network, we adjust neuron internal parameters to create the outputs we expect.

The “C” stands for “convolutional”. CNNs were designed specifically for learning with images, but are otherwise similar to standard neural networks. They learn filters that slide (“convolve”) across and down images in small sections at time, instead of going through the entire image at once. CNNs use less parameters and memory than regular neural networks, which allows them to work on much larger images than a traditional neural network.

Plain CNNs are good at object recognition, but if we want to do object detection we need to know where things are. That’s where the “R”, for “region” comes in. R-CNNs are able to draw bounding boxes around the objects they find. Over time there have been improvements to the original R-CNN to make them faster, and as you might expect they were called Fast R-CNN and Faster R-CNN. Faster R-CNN adds a Region Proposal Network at the end of a CNN to, you guessed it, propose regions. Those regions are then used as bounding boxes if an object is found inside them.

And finally the “Mask” part of the name is what adds pixel level segmentation and creates our object segmentation model. It adds an additional branch to the network to create binary masks which are similar to the ones we make when annotating images.

Okay, that’s a short overview of what the different parts mean and do. You can find more information on each of them in the References and Resources below. Now let’s get started with actually training our own version of Mask-RCNN.

Get Your Computer Ready

To run the examples you’re going to need an Ubuntu 16.04 system with a recent nvidia graphics card. I was able to train a small part of the network using a GeForce 940M with only 2GB of memory, but you’re better off trying to use an nvidia card with 11GB of memory or more. If you don’t have access one of those you can get started with Amazon Web Services or Google Cloud.

To make sure we’re all on the same page we’ll be using Docker to run everything. Docker uses scripts to create copies of systems, so you don’t have to worry about installing all the little things yourself. But before we can benefit from having things set up automatically for us, first we need to get our host system ready. This part can be a bit of a pain, but it’ll be worth it, I promise.

After installing Ubuntu 16.04, we need to install nvidia graphics drivers and CUDA (a platform for parallel computing). Start by opening a terminal and running the following commands to install the graphics drivers.

sudo add-apt-repository ppa:graphics-drivers/ppa sudo apt-get update sudo apt-get install nvidia-graphics-drivers-390

Then continue with installing CUDA.

wget https://developer.nvidia.com/compute/cuda/9.1/Prod/local_installers/cuda-repo-ubuntu1604-9-1-local_9.1.85-1_amd64 sudo dpkg -i cuda-repo-ubuntu1604-9-1-local_9.1.85-1_amd64.deb sudo apt-key add /var/cuda-repo-<version>/7fa2af80.pub sudo apt-get update sudo apt-get install cuda-toolkit-9-1 cuda-libraries-dev-9-1 cuda-libraries-9-1

Now we have to install Docker, Docker-Compose, and Nvidia-Docker.

wget -O get_docker.sh get.docker.com chmod +x get_docker.sh sudo ./get_docker.sh sudo apt-get install -y --allow-downgrades docker-ce=18.03.1~ce-0~ubuntu sudo usermod -aG docker $USER

sudo curl -L https://github.com/docker/compose/releases/download/1.21.0/docker-compose-$(uname -s)-$(uname -m) -o /usr/local/bin/docker-compose sudo chmod +x /usr/local/bin/docker-compose

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add - distribution=$(. /etc/os-release;echo $ID$VERSION_ID) curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list sudo apt-get update sudo apt-get install nvidia-docker2 sudo pkill -SIGHUP dockerd

Whew, that was a lot of commands to run. Hopefully everything installed okay and now we can actually start running our Mask R-CNN system. Restart your computer to make sure everything got applied before continuing.

Start Exploring

Download and extract deep-learning-explorer. Inside you’ll find a mask-rcnn folder and a data folder. There’s another zip file in the data/shapes folder that has our test dataset. Extract the shapes.zip file and move annotations , shapes_train2018 , shapes_test2018, and shapes_validate2018 to data/shapes.

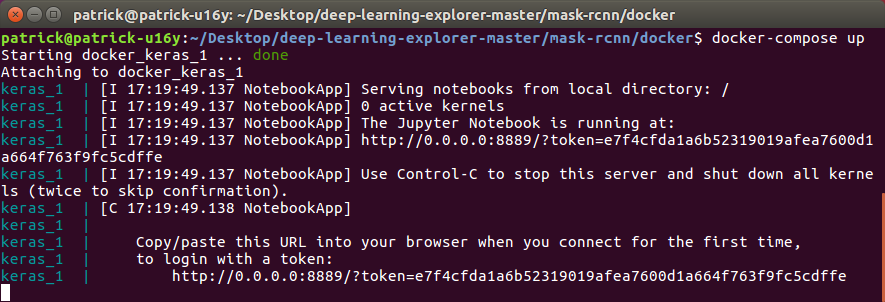

Back in a terminal, cd into mask-rcnn/docker and run docker-compose up. When you run this command the first time Docker will build the system from scratch, so it may take a few minutes to get ready. Every time after that though it will be ready almost immediately. Once it’s ready you should see something like this:

Copy-and-paste that last line into a web browser and you’ll be in Jupyter Notebook. Go to home/keras/mask-rcnn/notebooks and click on mask_rcnn.ipynb. Now you can step through each of the notebook cells and train your own Mask R-CNN model. Behind the scenes Keras with Tensorflow are training neural networks on GPUs. If you don’t have 11GB of graphics card memory, you may run into issues during the “Fine-tuning” step, but you should be able train just the top of the network with cards with as little as 2GB of memory.

The reason we can get fairly good results without having to spend days or weeks training our model, and without having thousands of examples, is because we copied weights (internal neuron parameters) from training done previously on the real COCO dataset. Since most image datasets have similar basic features like colors, and patterns, data from training one model can usually be used for training another. Copying data this way is called Transfer learning.

If you scroll to the bottom of the notebook you’ll notice that we only predict the right shape about 37% of the time. You can help make the model better by increasing the STEPS_PER_EPOCH up to 750 (the total amount of training samples) and running for 5 or more epochs.

During or after training, you can look at some graphs to see how things are going using TensorBoard. We’ll need to log into the Docker container we just started and run TensorBoard before we can access it in our web browser. In a terminal, run docker ps. This will show you all running containers. Use the first two characters of the CONTAINER ID to start a bash shell inside the Docker container training our model. For example, of our ID was d5242f7ab1e3 we would log in using docker exec -it d5 bash. Now that we’re in our container, run tensorboard --logdir ~/data/shapes/logs --host 0.0.0.0 and you should be able to visit http://localhost:8877 to access TensorBoard.

Now you’re ready to train a Mask R-CNN model on your own data. Let me know what other kinds of models you think would be interesting to explore.

References and Resources

pycococreator – transform your data

- R-CNN (arxiv)

- Fast R-CNN (arxiv)

- Faster R-CNN (arxiv)

- Mask R-CNN (arxiv)

- Stanford CS class notes

Thanks for the tutorial, but this isn’t running it on our own data at all, it’s just running it on another set besides COCO. A tutorial on how to format our own data and modify the loading functions to handle it would is more tricky and thus would be more useful.

If you have already annotated your dataset and can output the annotations into binary masks you can try to use pycococreator to create a COCO-style dataset, just like the shapes example. After converting to COCO-style you won’t need to modify loading functions.

https://patrickwasp.com/create-your-own-coco-style-dataset/

You’re saying to use the coco.py directly on a dataset created that way? I might be mistaken here, but it looks like it uses the coco api in the load_coco() function to look up image attributes specific to the COCO dataset. Of course even the CocoConfig class has NUM_CLASSES = 80 + 1, which would need to be changed, but it looks like that’s only one of many changes that need to be made. Am I incorrect and overcomplicating this? Thanks.

Once you create your own COCO-style dataset, you can train Mask R-CNN without having to do much else. Take a look at the notebook in the explorer. You’re right that you will have to set the amount of classes, but that shouldn’t be too much work. Just something like this:

Ah sorry yeah, I misunderstood the coco api functionality. I found that you also need to drop the fully connected layer in the pretained coco weights. Thanks for the data tool by the way it looks like it’s working well! Seems to be training with loss going down, although I keep getting the error:

Traceback (most recent call last):

File “/root/anaconda3/lib/python3.6/site-packages/mask_rcnn-2.1-py3.6.egg/mrcnn/model.py”, line 1695, in data_generator

use_mini_mask=config.USE_MINI_MASK)

File “/root/anaconda3/lib/python3.6/site-packages/mask_rcnn-2.1-py3.6.egg/mrcnn/model.py”, line 1210, in load_image_gt

mask, class_ids = dataset.load_mask(image_id)

File “coco.py”, line 247, in load_mask

image_info[“width”])

File “coco.py”, line 306, in annToMask

rle = self.annToRLE(ann, height, width)

File “coco.py”, line 291, in annToRLE

rles = maskUtils.frPyObjects(segm, height, width)

File “pycocotools/_mask.pyx”, line 293, in pycocotools._mask.frPyObjects

TypeError: Argument ‘bb’ has incorrect type (expected numpy.ndarray, got list)

Does bb just stand for bounding boxes and there’s an error since I only had masks?

Thanks! I missed looking at that notebook you created which includes all the modifications I didn’t initially realize

Hi,

I am right at that spot, where I have my own coco-style dataset (followed your tutorial) and I want to feed that into the Mask R-CNN. I don’t know how I can load the images and annotations. Does the coco.py work out of the box? I am not good at python, could you perhaps tell me how I could load my dataset?

The docker installation did not work for me due to the broken dependencies, and I am not sure if it can run without the docker installation, I do not have a folder called “home/keras” for one thing, so the code does not work.

What kind of errors are you getting? Post a gist of the output. Once you get Docker running, it will create a “/home/keras” directory for you (inside the docker environment).

Hi, in the Docker installation section, after I run

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

sudo apt-get update

I got error message like

E: Type ‘<!DOCTYPE’ is not known on line 1 in source list /etc/apt/sources.list.d/nvidia-docker.list

E: The list of sources could not be read.

I just wonder how to solve this problem? Thanks.

run

cat /etc/apt/sources.list.d/nvidia-docker.listYou should see something like:

If you see something else, edit the file to match the above.

The data/shapes/annotations folder contains 3 json files – separately for train, test and validate datasets. But your previous post on “Create your own COCO-style datasets” demonstrates how to annotate the train image dataset alone to produce a instances_shapes_train2018.json file. So, do we need to annotate the test and validate datasets too for running mask-rcnn.ipynb code ?

Yes, you would want all three, train, validate, and test datasets annotated. You can repeat the process shown for the train dataset to create the other two. The test dataset is only used to evaluate the model after it has been trained. The validation dataset helps you decide when your model has finished training, and prevents over-fitting.

Hello,

I’m trying to use Maskrcnn on my own annotated radar data with 3 bands (image, bounding box and mask), the images have different sizes. I want to know how can I start to train my model from my annotated data set and not from pre-trained weights ? (in my case, its radar images different from the COCO images database)

Thank you

Hi Anna,

If you don’t want to use pre-trained weights, just ignore the block with

inititalize_weights_with = "coco"But I recommend using the initial weights as your first try. There are many low-level features that are common across all visual datasets.

@waspinator

i’am trying to follow the nucleus sample, For the data set needed it’s juste a png images and png Masks for each image?

If you are using Mac OS X, you can use RectLabel.

An image annotation tool to label images for bounding box object detection and segmentation.

https://rectlabel.com

Key features:

– Drawing bounding box, polygon, and cubic bezier

– Export index color mask image and separated mask images

– 1-click buttons make your labeling work faster

– Customize the label dialog to combine with attributes

– Settings for objects, attributes, hotkeys, and labeling fast

– Search images whose labels include keywords

– Layer order for overlapped boxes

– Quick zoom to existing boxes

– Support the PASCAL VOC format

We provide python code to connect to the Mask R-CNN in Tensorflow.

https://rectlabel.com/help#tf_record

Can you explain how the model is evaluated? I understand it has something to do with getting predictions that have an IoU of over 0.5? Asking because the prediction looked pretty good, but only had a score of 0.24

Hi Patrick! Just to check, could you explain this block of code and what it does?

predictions =\

extra_utils.compute_multiple_per_class_precision(model, inference_config, dataset_test,

number_of_images=51, iou_threshold=0.5) #RY: changed number_of_images param to 51 (size of test set)

complete_predictions = []

for shape in predictions:

complete_predictions += predictions[shape]

print(“{} ({}): {}”.format(shape, len(predictions[shape]), np.mean(predictions[shape])))

print(“average: {}”.format(np.mean(complete_predictions)))

I don’t quite get what it’s evaluating, and how it goes about doing it. Thank you!

Hi John,

In general, that part is evaluating object detection by comparing the ground truth label with the predicted one. If the intersection over union is greater than 50%, it’s marked as a success. It splits up predictions by class (i.e. square, triangle, circle), and prints the average detection rate for the number of images used.

Was there something specific that was unclear?

Oh so to clarify, for the average prediction rate, it counts all the predictions, and then returns (Number of predictions with IoU over 0.5 / Total number of predictions)?

Is confidence considered? I.e. in the demo, numbers were printed with the bounding box, and I assume they were confidence and not IoU. Is this information used?

Thank you so much

The numbers shown on the image are confidence scores, but running the “evaluate” function looks at IoU > 0.5 to determine if the object was detected. The confidence has to be > 0.7 for it to be detected in the first place though.

dear friend

I have my own dataset that consist of Satellite Fotos(30000) and each image has corresponding ground truth (mask) that each mask has only one class (Edge Buildings )

how can I can use your script to get annotations automatic for each mask(Json file)or is there any solution whithout to taking long time ?

best regard